|

Highlight

|

Poster

[ ExHall D ]

Abstract

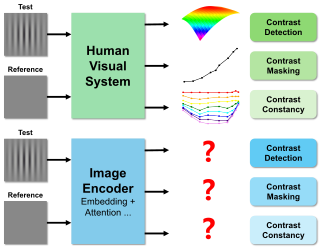

In recent years, the interpretability of Deep Neural Networks (DNNs) has garnered significant attention, particularly due to their widespread deployment in critical domains like healthcare, finance, and autonomous systems. To address the challenge of understanding how DNNs make decisions, Explainable AI (XAI) methods, such as saliency maps, have been developed to provide insights into the inner workings of these models. This paper introduces DiffCAM, a novel XAI method designed to overcome limitations in existing Class Activation Map (CAM)-based techniques, which often rely on decision boundary gradients to estimate feature importance. DiffCAM differentiates itself by considering the actual data distribution of the reference class, identifying feature importance based on how a target example differs from reference examples. This approach captures the most discriminative features without relying on decision boundaries or prediction results, making DiffCAM applicable to a broader range of models, including foundation models. Through extensive experiments, we demonstrate the superior performance and flexibility of DiffCAM in providing meaningful explanations across diverse datasets and scenarios.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

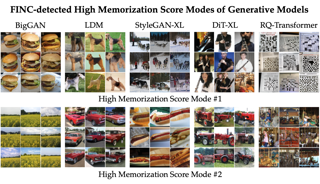

An interpretable comparison of generative models requires the identification of sample types produced differently by each of the involved models. While several quantitative scores have been proposed in the literature to rank different generative models, such score-based evaluations do not reveal the nuanced differences between the generative models in capturing various sample types. In this work, we attempt to solve a differential clustering problem to detect sample types expressed differently by two generative models. To solve the differential clustering problem, we propose a method called Fourier-based Identification of Novel Clusters (FINC) to identify modes produced by a generative model with a higher frequency in comparison to a reference distribution. FINC provides a scalable stochastic algorithm based on random Fourier features to estimate the eigenspace of kernel covariance matrices of two generative models and utilize the principal eigendirections to detect the sample types present more dominantly in each model. We demonstrate the application of the FINC method to large-scale computer vision datasets and generative model frameworks. Our numerical results suggest the scalability of the developed Fourier-based method in highlighting the sample types produced with different frequencies by widely-used generative models.
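
The random Fourier feature building block mentioned above can be illustrated with a minimal sketch. It is not the FINC algorithm itself: it only shows how random cosine and sine features approximate a Gaussian kernel, which is what makes a kernel covariance matrix tractable at scale. The function names, the bandwidth `sigma`, and the feature count are assumptions chosen for illustration.

```python
import math
import random


def make_rff(dim, num_features, sigma, seed=0):
    """Build a random Fourier feature map for a Gaussian kernel (illustrative)."""
    rng = random.Random(seed)
    # Frequencies are drawn from N(0, I / sigma^2), the spectral density
    # of the kernel exp(-||x - y||^2 / (2 * sigma^2)).
    freqs = [[rng.gauss(0.0, 1.0 / sigma) for _ in range(dim)]
             for _ in range(num_features)]
    scale = 1.0 / math.sqrt(num_features)

    def phi(x):
        feats = []
        for w in freqs:
            proj = sum(wi * xi for wi, xi in zip(w, x))
            feats.append(scale * math.cos(proj))
            feats.append(scale * math.sin(proj))
        return feats

    return phi


def gaussian_kernel(x, y, sigma):
    """Exact Gaussian kernel, used here only as a reference value."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


phi = make_rff(dim=3, num_features=2000, sigma=1.0, seed=1)
x, y = [0.1, 0.2, 0.3], [0.4, 0.0, -0.1]
approx = sum(a * b for a, b in zip(phi(x), phi(y)))
exact = gaussian_kernel(x, y, 1.0)
```

With feature vectors of fixed length, the kernel covariance of a sample set can be estimated as an average of outer products of `phi(x)`, so its size depends on the number of features rather than the number of samples.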

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

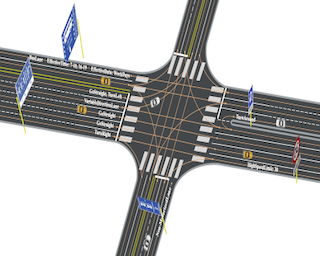

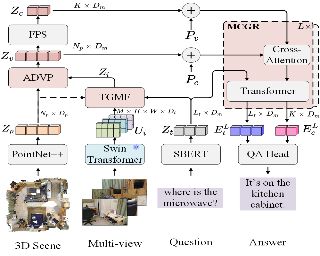

Autonomous driving visual question answering (AD-VQA) aims to answer questions related to perception, prediction, and planning based on given driving scene images, and relies heavily on the model's spatial perception capabilities. Previous works typically express spatial comprehension through textual representations of spatial coordinates, resulting in semantic gaps between visual coordinate representations and textual descriptions. This oversight hinders the accurate transmission of spatial information and increases the expressive burden. To address this, we propose the Marker-based Prompt Learning framework (MPDrive), which transforms spatial coordinates into concise visual markers, ensuring linguistic consistency and enhancing the accuracy of visual perception and spatial expression in AD-VQA. Specifically, MPDrive converts complex spatial coordinates into text-based visual marker predictions, simplifying the expression of spatial information for autonomous decision-making. Moreover, we introduce visual marker images as conditional inputs and integrate object-level fine-grained features to further enhance multi-level spatial perception abilities. Extensive experiments on the DriveLM and CODA-LM datasets show that MPDrive performs at state-of-the-art levels, particularly in cases requiring sophisticated spatial understanding.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

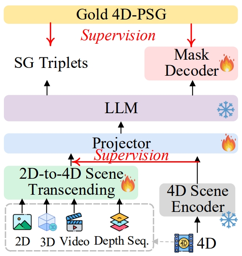

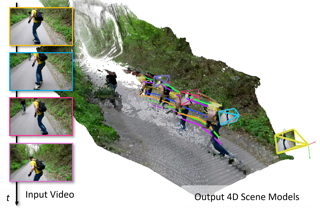

The recently introduced 4D Panoptic Scene Graph (4D-PSG) provides the most advanced representation to date for comprehensively modeling the dynamic 4D visual world. Unfortunately, current pioneering 4D-PSG research suffers severely from data scarcity and the resulting out-of-vocabulary problems; in addition, the pipeline nature of the benchmark generation method can lead to suboptimal performance. To address these challenges, this paper investigates a novel framework for 4D-PSG generation that leverages rich 2D visual scene annotations to enhance 4D scene learning. First, we introduce a 4D Large Language Model (4D-LLM) integrated with a 3D mask decoder for end-to-end generation of 4D-PSG. A chained SG inference mechanism is further designed to exploit LLMs' open-vocabulary capabilities to infer accurate and comprehensive object and relation labels iteratively. Most importantly, we propose a 2D-to-4D visual scene transfer learning framework, where a spatial-temporal scene transcending strategy transfers dimension-invariant features from abundant 2D SG annotations to 4D scenes, effectively compensating for data scarcity in 4D-PSG. Extensive experiments on the benchmark data demonstrate that our method outperforms baseline models by an average of 14.62%, highlighting its effectiveness.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

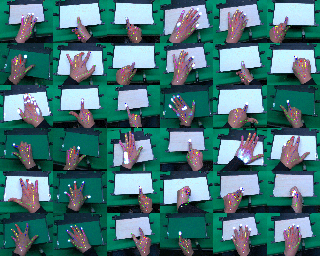

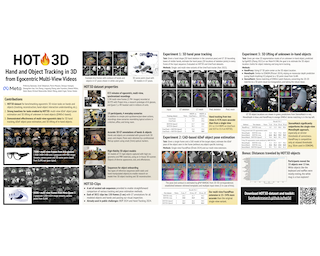

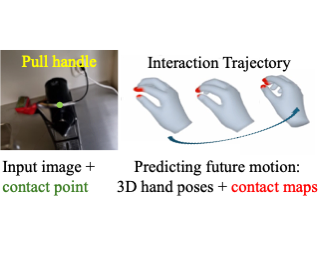

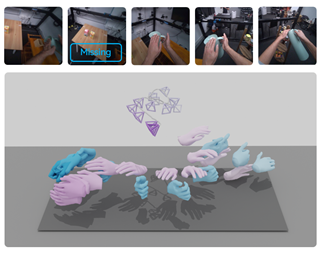

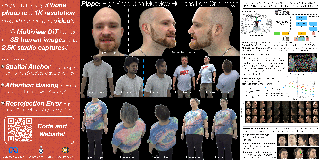

Estimating touch contact and pressure in egocentric vision is a central task for downstream applications in Augmented Reality, Virtual Reality, and many robotic applications, because it provides precise physical insights into hand-object interaction and object manipulation. However, existing contact pressure datasets lack egocentric views and hand poses, which are essential for accurate estimation during in-situ operation, both for AR/VR interaction and robotic manipulation. In this paper, we introduce a novel dataset of touch contact and pressure interaction from an egocentric perspective, complemented with hand pose meshes and fine-grained pressure intensities for each contact. The hand poses in our dataset are optimized using our proposed multi-view sequence-based method that processes footage from our capture rig of 8 accurately calibrated RGBD cameras. The dataset comprises 5.0 hours of touch contact and pressure interaction from 21 participants captured by a moving egocentric camera and 7 stationary Kinect cameras, which provided RGB images and depth maps at 30 Hz. In addition, we provide baselines for estimating pressure with different modalities, which will enable future developments and benchmarking on the dataset. Overall, we demonstrate that pressure and hand poses are complementary, which supports our intention to better facilitate the physical understanding of hand-object interactions in AR/VR …

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

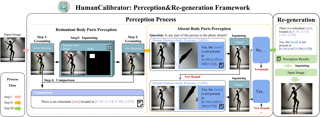

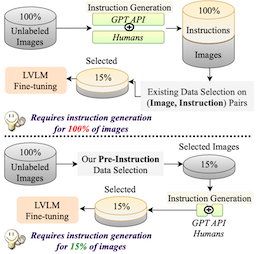

Traditional feedback learning for hallucination reduction relies on labor-intensive manual labeling or expensive proprietary models. This leaves the community without foundational knowledge about how to build high-quality feedback with open-source MLLMs. In this work, we introduce RLAIF-V, a novel framework that aligns MLLMs in a fully open-source paradigm. RLAIF-V maximally explores open-source MLLMs from two perspectives: high-quality feedback data generation for preference learning and self-feedback guidance for inference-time scaling. Extensive experiments on seven benchmarks in both automatic and human evaluation show that RLAIF-V substantially enhances the trustworthiness of models at both preference learning and inference time. RLAIF-V 7B reduces object hallucination by 80.7% and overall hallucination by 33.7%. Remarkably, RLAIF-V 12B further reveals the self-alignment potential of open-source MLLMs, where the model can learn from its own feedback to achieve super GPT-4V trustworthiness.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

Rolling shutter (RS) cameras dominate consumer and smartphone markets. Several methods for computing the absolute pose of RS cameras have appeared in the last 20 years, but the relative pose problem has not been fully solved yet. We provide a unified theory for the important class of order-one rolling shutter (RS$_1$) cameras. These cameras generalize the perspective projection to RS cameras, projecting a generic space point to exactly one image point via a rational map. We introduce a new back-projection RS camera model, characterize RS$_1$ cameras, construct explicit parameterizations of such cameras, and determine the image of a space line. We classify all minimal problems for solving the relative camera pose problem with linear RS$_1$ cameras and discover new practical cases. Finally, we show how the theory can be used to explain RS models previously used for absolute pose computation.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

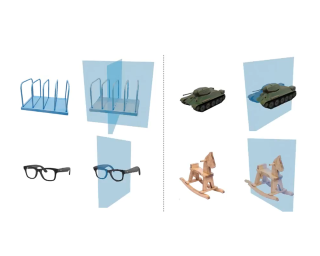

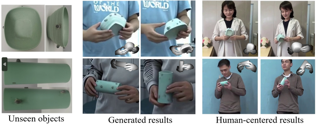

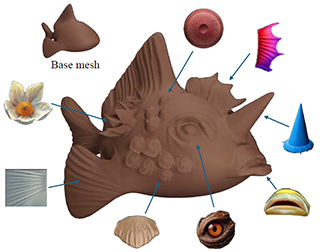

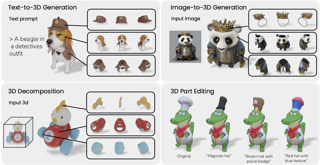

Controllable generation has achieved substantial progress in both 2D and 3D domains, yet current conditional generation methods still face limitations in describing detailed shape structures. Skeletons can effectively represent and describe object anatomy and pose. Unfortunately, past studies are often limited to human skeletons. In this work, we generalize skeletal conditioned generation to arbitrary structures. First, we design a reliable mesh skeletonization pipeline to generate a large-scale mesh-skeleton paired dataset. Based on the dataset, a multi-view and 3D generation pipeline is built. We propose to represent 3D skeletons by Coordinate Color Encoding as 2D conditional images. A Skeletal Correlation Module is designed to extract global skeletal features for condition injection. After multi-view images are generated, 3D assets can be obtained by incorporating a large reconstruction model, followed by a UV texture refinement stage. As a result, our method achieves instant generation of multi-view and 3D content aligned with the given skeletons. The proposed techniques largely improve the object-skeleton alignment and generation quality. Project page at https://skdream3d.github.io/. Dataset, code, and models will be released publicly.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

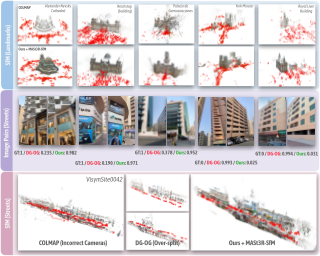

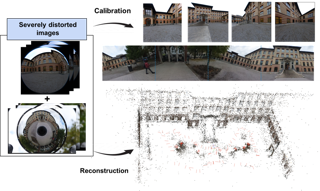

Accurate 3D reconstruction is frequently hindered by visual aliasing, where visually similar but distinct surfaces (aka doppelgangers) are incorrectly matched. These spurious matches distort the structure-from-motion (SfM) process, leading to misplaced model elements and reduced accuracy. Prior efforts addressed this with CNN classifiers trained on curated datasets, but these approaches struggle to generalize across diverse real-world scenes and can require extensive parameter tuning. In this work, we present Doppelgangers++, a method to enhance doppelganger detection and improve 3D reconstruction accuracy. Our contributions include a diversified training dataset that incorporates geo-tagged images from everyday scenes to expand robustness beyond landmark-based datasets. We further propose a Transformer-based classifier that leverages 3D-aware features from the MASt3R model, achieving superior precision and recall across both in-domain and out-of-domain tests. Doppelgangers++ integrates seamlessly into standard SfM and MASt3R-SfM pipelines, offering efficiency and adaptability across varied scenes. To evaluate SfM accuracy, we introduce an automated, geotag-based method for validating reconstructed models, eliminating the need for manual inspection. Through extensive experiments, we demonstrate that Doppelgangers++ significantly enhances pairwise visual disambiguation and improves 3D reconstruction quality in complex and diverse scenarios.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

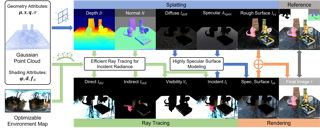

The increasing demand for high-quality 3D assets across various industries necessitates efficient and automated 3D content creation. Despite recent advancements in 3D generative models, existing methods still face challenges with optimization speed, geometric fidelity, and the lack of assets for physically based rendering (PBR). In this paper, we introduce 3DTopia-XL, a scalable native 3D generative model designed to overcome these limitations. 3DTopia-XL leverages a novel primitive-based 3D representation, PrimX, which encodes detailed shape, albedo, and material field into a compact tensorial format, facilitating the modeling of high-resolution geometry with PBR assets. On top of the novel representation, we propose a generative framework based on Diffusion Transformer (DiT), which comprises 1) Primitive Patch Compression and 2) Latent Primitive Diffusion. 3DTopia-XL learns to generate high-quality 3D assets from textual or visual inputs. Extensive qualitative and quantitative experiments demonstrate that 3DTopia-XL significantly outperforms existing methods in generating high-quality 3D assets with fine-grained textures and materials, efficiently bridging the quality gap between generative models and real-world applications.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

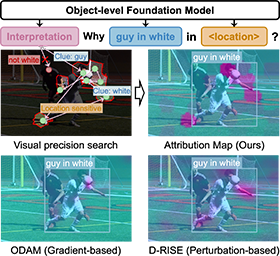

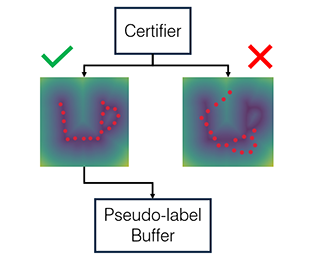

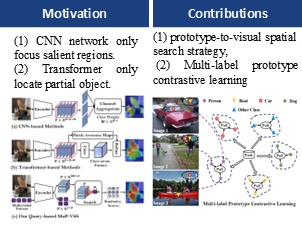

Advances in multimodal pre-training have propelled object-level foundation models, such as Grounding DINO and Florence-2, in tasks like visual grounding and object detection. However, interpreting these models’ decisions has grown increasingly challenging. Existing interpretable attribution methods for object-level task interpretation have notable limitations: (1) gradient-based methods lack precise localization due to visual-textual fusion in foundation models, and (2) perturbation-based methods produce noisy saliency maps, limiting fine-grained interpretability. To address these, we propose a Visual Precision Search method that generates accurate attribution maps with fewer regions. Our method bypasses internal model parameters to overcome attribution issues from multimodal fusion, dividing inputs into sparse sub-regions and using consistency and collaboration scores to accurately identify critical decision-making regions. We also conducted a theoretical analysis of the boundary guarantees and scope of applicability of our method. Experiments on RefCOCO, MS COCO, and LVIS show our approach enhances object-level task interpretability over SOTA for Grounding DINO and Florence-2 across various evaluation metrics, with faithfulness gains of 23.7%, 31.6%, and 20.1% on MS COCO, LVIS, and RefCOCO for Grounding DINO, and 102.9% and 66.9% on MS COCO and RefCOCO for Florence-2. Additionally, our method can interpret failures in visual grounding and object detection tasks, surpassing existing …

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

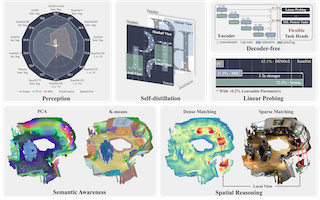

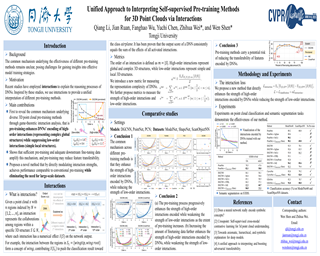

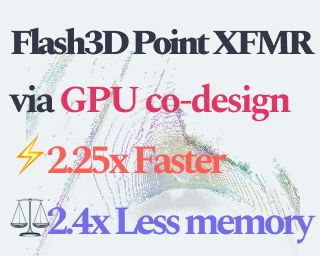

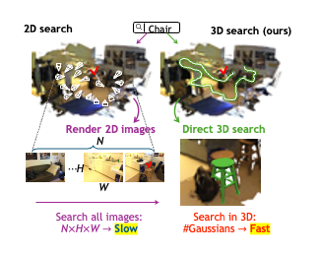

In this paper, we question whether we have a reliable self-supervised point cloud model that can be used for diverse 3D tasks via simple linear probing, even with limited data and minimal computation. We find that existing 3D self-supervised learning approaches fall short when evaluated on representation quality through linear probing. We hypothesize that this is due to what we term the geometric shortcut, which causes representations to collapse to low-level spatial features. This challenge is unique to 3D and arises from the sparse nature of point cloud data. We address it through two key strategies: obscuring spatial information and enhancing the reliance on input features, ultimately composing a Sonata of 140k point clouds through self-distillation. Sonata is simple and intuitive, yet its learned representations are strong and reliable: zero-shot visualizations demonstrate semantic grouping, alongside strong spatial reasoning through nearest-neighbor relationships. Sonata demonstrates exceptional parameter and data efficiency, tripling linear probing accuracy (from 21.8% to 72.5%) on ScanNet and nearly doubling performance with only 1% of the data compared to previous approaches. Full fine-tuning further advances SOTA across both 3D indoor and outdoor perception tasks. All code and weights will be made available.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

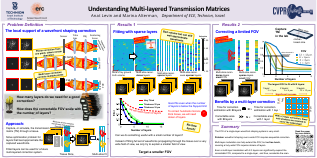

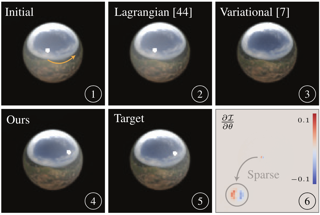

Transmission matrices, mapping the propagation of light from one end of the tissue to the other, form an important mathematical tool in the analysis of tissue scattering and the design of wavefront shaping systems. To understand the relationship between their content and the volumetric structure of the tissue, we wish to fit them with multi-slice models, composed of a set of planar aberrations spaced throughout the volume. The number of layers used in such a model largely determines the amount of information compression and the ease with which we can use such layered models in a wavefront-shaping system. This work offers a theoretical study of such multi-layered models. We attempt to understand how many layers are required for a good fit, and how the approximation degrades when a smaller number of layers is used. We show analytically that transmission matrices can be well fitted with very sparse layers. This leads to optimistic predictions on our ability to use them to design future wavefront shaping systems that can correct tissue aberration over a wide field of view.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

This paper introduces a novel approach to improve camera position estimation in global Structure-from-Motion (SfM) frameworks by filtering inaccurate pose graph edges, which represent relative translation estimates, before applying translation averaging. In SfM, pose graph vertices represent cameras, and edges represent relative poses (rotation and translation) between cameras. We formulate the edge filtering problem as vertex filtering in the dual graph: a line graph whose vertices stem from edges in the original graph and whose edges stem from cameras. Exploiting this representation, we frame the problem as a binary classification over nodes in the dual graph. To learn such a classification and find outlier edges, we employ a Transformer architecture-based technique. To address the challenge of memory overflow often caused by converting to a line graph, we introduce a clustering-based graph processing approach, enabling the application of our method to arbitrarily large pose graphs. The proposed method outperforms existing relative translation filtering techniques in terms of final camera position accuracy and can be seamlessly integrated with any other filter. The code will be made public.
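
The dual (line) graph construction described above can be sketched as follows. This is only an illustration of the graph transformation, not the paper's pipeline: the function name and the tuple layout are hypothetical, and the classifier and clustering steps are omitted.

```python
from itertools import combinations


def line_graph(edges):
    """Build the line graph of an undirected pose graph.

    Each original edge (camera pair) becomes a dual node. Two dual nodes
    are connected when their original edges share a camera; the shared
    camera is stored on the dual edge.
    """
    nodes = [tuple(sorted(e)) for e in edges]

    # Map each camera to the indices of the original edges touching it.
    incident = {}
    for idx, (u, v) in enumerate(nodes):
        incident.setdefault(u, []).append(idx)
        incident.setdefault(v, []).append(idx)

    dual_edges = set()
    for cam, idxs in incident.items():
        for i, j in combinations(idxs, 2):
            dual_edges.add((min(i, j), max(i, j), cam))
    return nodes, sorted(dual_edges)


# A camera seen in 4 relative poses yields 6 dual edges on its own.
star_nodes, star_dual = line_graph([(0, 1), (0, 2), (0, 3), (0, 4)])
```

A camera with degree d contributes d(d-1)/2 dual edges, which gives some intuition for why the line graph of a dense pose graph can exhaust memory.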

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

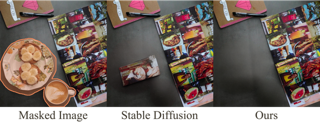

Recent advances in image inpainting increasingly use generative models to handle large irregular masks. However, these models can create unrealistic inpainted images due to two main issues: (1) Context Instability: Even with unmasked areas as context, generative models may still generate arbitrary objects in the masked region that don’t align with the rest of the image. (2) Hue Inconsistency: Inpainted regions often have color shifts that cause a smeared appearance, reducing image quality. Retraining the generative model could help solve these issues, but it’s costly since state-of-the-art latent-based diffusion and rectified flow models require a three-stage training process: training a VAE, training a generative U-Net or transformer, and fine-tuning for inpainting. Instead, this paper proposes a post-processing approach, dubbed ASUKA (Aligned Stable inpainting with UnKnown Areas prior), to improve inpainting models. To address context instability, we leverage a Masked Auto-Encoder (MAE) for reconstruction-based priors. This strengthens context alignment while maintaining the model's generation capabilities. To address hue inconsistency, we propose a specialized VAE decoder that treats latent-to-image decoding as a local harmonization task, significantly reducing color shifts for hue-consistent inpainting. We validate ASUKA on SD 1.5 and FLUX inpainting variants using the Places2 benchmark and MISATO, our proposed diverse collection of …

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

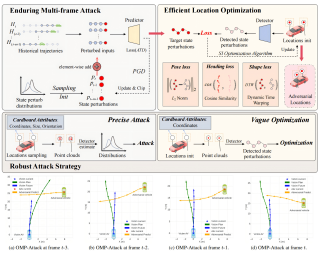

Trajectory prediction plays a crucial role in autonomous driving systems, and exploring its vulnerability has garnered widespread attention. However, existing trajectory prediction attack methods often rely on single-point attacks to make efficient perturbations. This limits their applications in real-world scenarios due to the transient nature of single-point attacks, their susceptibility to filtration, and the uncertainty regarding the deployment environment. To address these challenges, this paper proposes a novel LiDAR-induced attack framework to impose multi-frame attacks by optimization-driven adversarial location search, achieving endurance, efficiency, and robustness. This framework strategically places objects near the adversarial vehicle to implement an attack and introduces three key innovations. First, successive state perturbations are generated using a multi-frame single-point attack strategy, effectively misleading trajectory predictions over extended time horizons. Second, we efficiently optimize adversarial objects' locations through three specialized loss functions to achieve desired perturbations. Lastly, we improve robustness by treating the adversarial object as a point without size constraints during the location search phase and reduce dependence on both the specific attack point and the adversarial object's properties. Extensive experiments confirm the superior performance and robustness of our framework.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

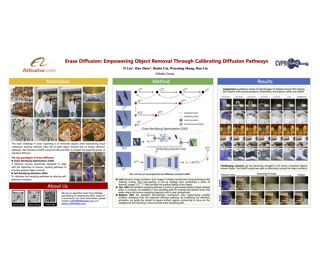

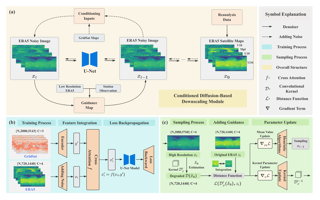

Erase inpainting, or object removal, aims to precisely remove target objects within masked regions while preserving the overall consistency of the surrounding content. Although diffusion-based methods have made significant strides in image inpainting, challenges remain regarding the emergence of unexpected objects or artifacts. We assert that the inexact diffusion pathways established by existing standard optimization paradigms constrain the efficacy of object removal. To tackle these challenges, we propose a novel Erase Diffusion, termed EraDiff, aimed at unleashing the potential of standard diffusion in the context of object removal. In contrast to standard diffusion, EraDiff adapts both the optimization paradigm and the network to improve the coherence and elimination of the erasure results. We first introduce a Chain-Rectifying Optimization (CRO) paradigm, a sophisticated diffusion process specifically designed to align with the objectives of erasure. This paradigm establishes innovative diffusion transition pathways that simulate the gradual elimination of objects during optimization, allowing the model to accurately capture the intent of object removal. Furthermore, to mitigate deviations caused by artifacts during the sampling pathways, we develop a simple yet effective Self-Rectifying Attention (SRA) mechanism. The SRA calibrates the sampling pathways by altering self-attention activation, allowing the model to effectively …

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

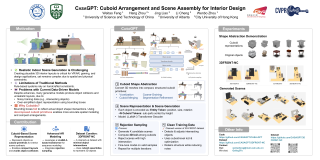

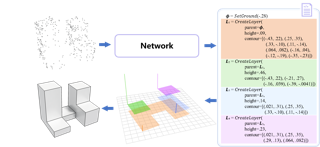

We present a novel approach for indoor scene synthesis, which learns to arrange decomposed cuboid primitives to represent 3D objects within a scene. Unlike conventional methods that use bounding boxes to determine the placement and scale of 3D objects, our approach leverages cuboids as a straightforward yet highly effective alternative for modeling objects. This allows for compact scene generation while minimizing object intersections. Our approach, coined CASAGPT for Cuboid Arrangement and Scene Assembly, employs an autoregressive model to sequentially arrange cuboids, producing physically plausible scenes. By applying rejection sampling during the fine-tuning stage to filter out scenes with object collisions, our model further reduces intersections and enhances scene quality. Additionally, we introduce a refined dataset, 3DFRONT-NC, which eliminates significant noise present in the original dataset, 3D-FRONT. Extensive experiments on the 3D-FRONT dataset as well as our dataset demonstrate that our approach consistently outperforms state-of-the-art methods, enhancing the realism of generated scenes and providing a promising direction for 3D scene synthesis.
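
The collision-based rejection step can be illustrated with a minimal sketch. It assumes axis-aligned cuboids given as `(min_corner, max_corner)` pairs and a scene given as a list of objects, each a list of cuboids. These representations and all function names are assumptions for illustration; the abstract does not specify the cuboid parameterization or the collision test.

```python
def cuboids_overlap(a, b):
    """True if two axis-aligned cuboids share interior volume.

    Each cuboid is (min_corner, max_corner) with 3D coordinates.
    Cuboids that only touch at a face, edge, or corner do not count.
    """
    (amin, amax), (bmin, bmax) = a, b
    return all(amin[k] < bmax[k] and bmin[k] < amax[k] for k in range(3))


def scene_has_collision(scene):
    """Check every pair of distinct objects for intersecting cuboids."""
    for i in range(len(scene)):
        for j in range(i + 1, len(scene)):
            if any(cuboids_overlap(a, b) for a in scene[i] for b in scene[j]):
                return True
    return False


def reject_colliding(scenes):
    """Keep only sampled scenes without inter-object collisions."""
    return [s for s in scenes if not scene_has_collision(s)]


table = [((0, 0, 0), (1, 1, 1))]
chair_inside = [((0.5, 0.5, 0.5), (2, 2, 2))]   # penetrates the table
chair_beside = [((1, 0, 0), (2, 1, 1))]         # only touches a face
kept = reject_colliding([[table, chair_inside], [table, chair_beside]])
```

Only the second sampled scene survives the filter, since touching faces are not treated as a collision in this sketch.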

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

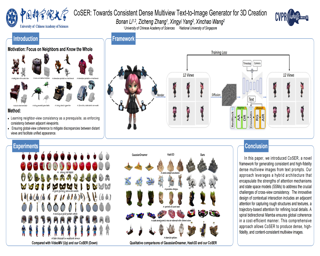

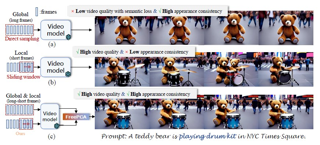

Generating dense multiview images from text prompts is crucial for creating high-fidelity 3D assets. Nevertheless, existing methods struggle with space-view correspondences, resulting in sparse and low-quality outputs. In this paper, we introduce CoSER, a novel consistent dense Multiview Text-to-Image Generator for Text-to-3D, achieving both efficiency and quality by meticulously learning neighbor-view coherence and further alleviating ambiguity through the swift traversal of all views. To achieve neighbor-view consistency, each viewpoint densely interacts with adjacent viewpoints to perceive the global spatial structure, and aggregates information along motion paths explicitly defined by physical principles to refine details. To further enhance cross-view consistency and alleviate content drift, CoSER rapidly scans all views in a spiral bidirectional manner to capture holistic information and then scores each point based on semantic material. Subsequently, we conduct weighted down-sampling along the spatial dimension based on these scores, thereby facilitating prominent information fusion across all views with lightweight computation. Technically, the core module is built by integrating the attention mechanism with a selective state space model, exploiting the robust learning capabilities of the former and the low overhead of the latter. Extensive evaluation shows that CoSER is capable of producing dense, high-fidelity, content-consistent multiview images that can be flexibly integrated into …

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

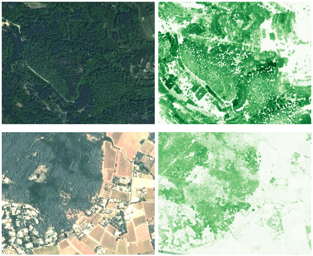

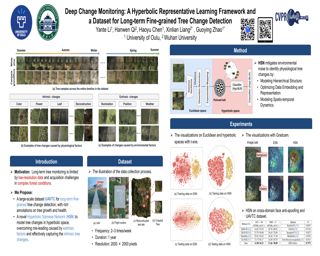

Estimating canopy height and its changes at meter resolution from satellite imagery is a significant challenge in computer vision with critical environmental applications. However, the lack of open-access datasets at this resolution hinders the reproducibility and evaluation of models. We introduce Open-Canopy, the first open-access, country-scale benchmark for very high-resolution (1.5 m) canopy height estimation, covering over 87,000 km² across France with 1.5 m resolution satellite imagery and aerial LiDAR data. Additionally, we present Open-Canopy-∆, a benchmark for canopy height change detection between images from different years at tree level, a challenging task for current computer vision models. We evaluate state-of-the-art architectures on these benchmarks, highlighting significant challenges and opportunities for improvement. Our datasets and code are publicly available at [URL].

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

Human pose plays a crucial role in the digital age. While recent works have achieved impressive progress in understanding and generating human poses, they often support only a single modality of control signals and operate in isolation, limiting their application in real-world scenarios. This paper presents UniPose, a framework employing Large Language Models (LLMs) to comprehend, generate, and edit human poses across various modalities, including images, text, and 3D SMPL poses. Specifically, we apply a pose tokenizer to convert 3D poses into discrete pose tokens, enabling seamless integration into the LLM within a unified vocabulary. To further enhance fine-grained pose perception, we equip UniPose with a mixture of visual encoders, among them a pose-specific visual encoder. Benefiting from a unified learning strategy, UniPose effectively transfers knowledge across different pose-relevant tasks, adapts to unseen tasks, and exhibits extended capabilities. This work serves as the first attempt at building a general-purpose framework for pose comprehension, generation, and editing. Extensive experiments highlight UniPose's competitive and even superior performance across various pose-relevant tasks.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

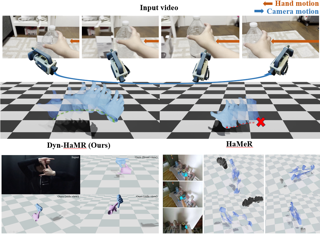

We propose Dyn-HaMR, to the best of our knowledge the first approach to reconstruct 4D global hand motion from monocular videos recorded by dynamic cameras in the wild. Reconstructing accurate 3D hand meshes from monocular videos is a crucial task for understanding human behaviour, with significant applications in augmented and virtual reality (AR/VR). However, existing methods for monocular hand reconstruction typically rely on a weak perspective camera model, which simulates hand motion within a limited camera frustum. As a result, these approaches struggle to recover the full 3D global trajectory and often produce noisy or incorrect depth estimations, particularly when the video is captured by dynamic or moving cameras, which is common in egocentric scenarios. Dyn-HaMR consists of a multi-stage, multi-objective optimization pipeline that factors in (i) simultaneous localization and mapping (SLAM) to robustly estimate relative camera motion, (ii) an interacting-hand prior for generative infilling and to refine the interaction dynamics, ensuring plausible recovery under (self-)occlusions, and (iii) hierarchical initialization through a combination of state-of-the-art hand tracking methods.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

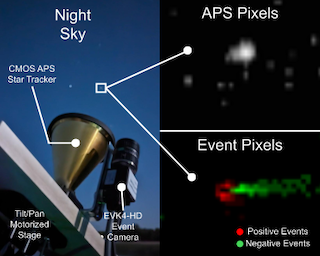

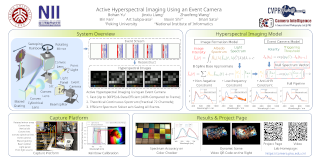

Event-based sensors (EBS) are a promising new technology for star tracking due to their low latency and power efficiency, but prior work has thus far been evaluated exclusively in simulation with simplified signal models. We propose a novel algorithm for event-based star tracking, grounded in an analysis of the EBS circuit and an extended Kalman filter (EKF). We quantitatively evaluate our method using real night sky data, comparing its results with those from a space-ready active-pixel sensor (APS) star tracker. We demonstrate that our method is an order of magnitude more accurate than existing methods due to improved signal modeling and state estimation, while providing more frequent updates and greater motion tolerance than conventional APS trackers. We provide all code and the first dataset of events synchronized with APS solutions.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

Generating 360-degree panoramas from a narrow field-of-view (NFoV) image is a promising computer vision task for Virtual Reality (VR) applications. Existing methods mostly assess the generated panoramas with InceptionNet or CLIP based metrics, which tend to capture image quality and are **not suitable for evaluating the distortion**. In this work, we first propose a distortion-specific CLIP, named Distort-CLIP, to accurately evaluate the panorama distortion and discover the **"visual cheating"** phenomenon in previous works (i.e., tending to improve the visual results by sacrificing distortion accuracy). This phenomenon arises because prior methods employ a single network to learn the distinct panorama distortion and content completion at once, which leads the model to prioritize optimizing the latter. To address the phenomenon, we propose **PanoDecouple**, a decoupled diffusion model framework, which decouples the panorama generation into distortion guidance and content completion, aiming to generate panoramas with both accurate distortion and visual appeal. Specifically, we design a DistortNet for distortion guidance by imposing a panorama-specific distortion prior and a modified condition registration mechanism, and a ContentNet for content completion by imposing perspective image information. Additionally, a distortion correction loss function with Distort-CLIP is introduced to constrain the distortion explicitly. The extensive experiments validate that …

|

|

Highlight

|

Poster

[ ExHall D ]

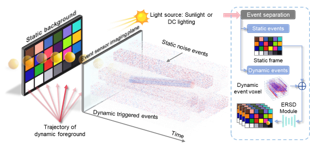

Abstract

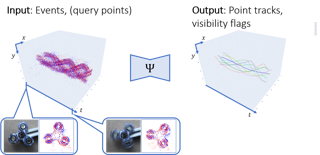

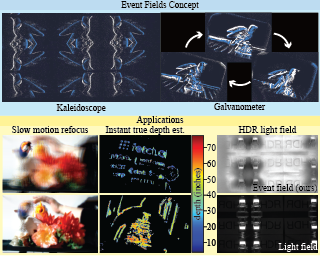

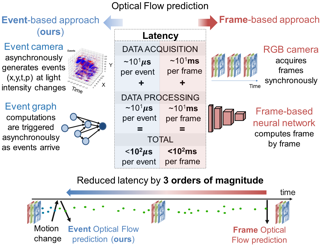

Tracking any point (TAP) recently shifted the motion estimation paradigm from focusing on individual salient points with local templates to tracking arbitrary points with global image contexts. However, while research has mostly focused on driving the accuracy of models in nominal settings, addressing scenarios with difficult lighting conditions and high-speed motions remains out of reach due to the limitations of the sensor. This work addresses this challenge with the first event camera-based TAP method. It leverages the high temporal resolution and high dynamic range of event cameras for robust high-speed tracking, and the global contexts in TAP methods to handle asynchronous and sparse event measurements. We further extend the TAP framework to handle event feature variations induced by motion - thereby addressing an open challenge in purely event-based tracking - with a novel feature alignment loss which ensures the learning of motion-robust features. Our method is trained with data from a new data generation pipeline and systematically ablated across all design decisions. Our method shows strong cross-dataset generalization and performs 135% better on the average Jaccard metric than the baselines. Moreover, on an established feature tracking benchmark, it achieves a 19% improvement over the previous best event-only method and even …

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

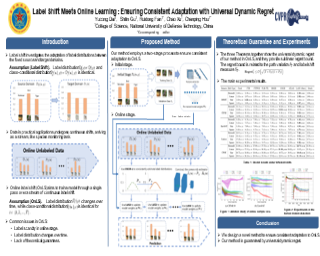

Label shift, which investigates the adaptation of label distributions between fixed source and target domains, has attracted significant research interest and found broad application in offline settings. In real-world scenarios, however, data often arrives as a continuous stream, so addressing label shift in online learning settings is paramount. Existing strategies, which tailor traditional offline label shift techniques to online settings, suffer degraded performance due to inconsistent estimation of label distributions and violation of the convexity assumption required for theoretical guarantees. In this paper, we propose a novel method to ensure consistent adaptation to online label shift. We construct a new convex risk estimator that is pivotal for both online optimization and theoretical analysis. Furthermore, we enhance an optimistic online algorithm as the base learner and refine the classifier using an ensemble method. Theoretically, we derive a universal dynamic regret bound that is minimax optimal. Extensive experiments on both real-world datasets and a human motion task demonstrate the superiority of our method compared with existing methods.
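For readers unfamiliar with the setting, the sketch below shows the standard posterior correction used under the label shift assumption (class-conditional features unchanged, only label frequencies move). It is background, not the risk estimator proposed in the abstract, and it assumes the target label distribution is already known, whereas the online setting has to estimate it from the stream. The function name and the numbers are hypothetical.

```python
def reweight_posterior(source_probs, source_prior, target_prior):
    """Adapt p_source(y | x) to a new label distribution.

    Under label shift, p_target(y | x) is proportional to
    p_source(y | x) * target_prior[y] / source_prior[y].
    """
    weighted = [
        p * q / s
        for p, s, q in zip(source_probs, source_prior, target_prior)
    ]
    total = sum(weighted)
    # Renormalize so the adapted scores form a probability distribution.
    return [w / total for w in weighted]


# A classifier trained on balanced data slightly prefers class 0,
# but the stream is now dominated by class 1 (illustrative numbers).
adapted = reweight_posterior(
    source_probs=[0.6, 0.4],
    source_prior=[0.5, 0.5],
    target_prior=[0.1, 0.9],
)
```

With these numbers the adapted prediction flips to class 1, which is the effect any label shift correction aims for once the new label frequencies are estimated reliably.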

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

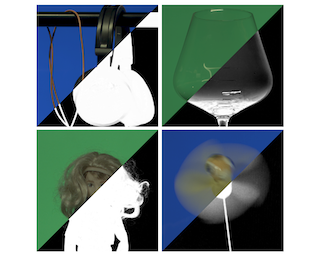

This paper considers the long-standing problem of extracting alpha mattes from video using a known background. While various color-based or polarization-based approaches have been studied in past decades, the problem remains ill-posed because the solutions solely rely on either color or polarization. We introduce Polarized Color Screen Matting, a single-shot, per-pixel matting theory for alpha matte and foreground color recovery using both color and polarization cues. Through a theoretical analysis of our diffuse-specular polarimetric compositing equation, we derive practical closed-form matting methods with their solvability conditions. Our theory concludes that an alpha matte can be extracted without manual corrections using off-the-shelf equipment such as an LCD monitor, polarization camera, and unpolarized lights with calibrated color. Experiments on synthetic and real-world datasets verify the validity of our theory and show the capability of our matting methods on real videos with quantitative and qualitative comparisons to color-based and polarization-based matting methods.
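The ill-posedness of color-only matting can be seen with the standard compositing equation I = αF + (1 − α)B. The sketch below uses that textbook equation, not the diffuse-specular polarimetric equation from the paper, and the pixel values are made up for illustration: two different (alpha, foreground) pairs reproduce exactly the same observed color over a known background.

```python
def composite(alpha, foreground, background):
    """Standard compositing per channel: I = alpha * F + (1 - alpha) * B."""
    return tuple(
        alpha * f + (1.0 - alpha) * b
        for f, b in zip(foreground, background)
    )


background = (0.0, 0.0, 1.0)  # known blue backdrop (illustrative value)

# Explanation 1: a half-transparent pure red foreground.
semi_transparent_red = composite(0.5, (1.0, 0.0, 0.0), background)

# Explanation 2: a fully opaque purple foreground.
opaque_purple = composite(1.0, (0.5, 0.0, 0.5), background)

# Both explanations yield the same observed pixel, so color alone
# cannot decide between them.
ambiguous = semi_transparent_red == opaque_purple
```

Each pixel gives three color equations but has four unknowns (alpha plus three foreground channels), which is why an additional cue is needed to pin down the matte.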

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

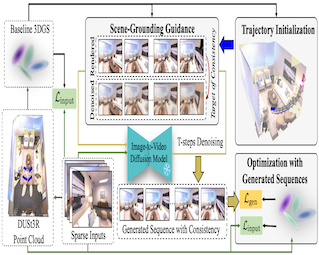

Despite recent successes in novel view synthesis using 3D Gaussian Splatting (3DGS), modeling scenes with sparse inputs remains a challenge. In this work, we address two critical yet overlooked issues in real-world sparse-input modeling: extrapolation and occlusion. To tackle these issues, we propose to use a reconstruction by generation pipeline that leverages learned priors from video diffusion models to provide plausible interpretations for regions outside the field of view or occluded. However, the generated sequences exhibit inconsistencies that do not fully benefit subsequent 3DGS modeling. To address the challenge of inconsistency, we introduce a novel scene-grounding guidance based on rendered sequences from an optimized 3DGS, which tames the diffusion model to generate consistent sequences. This guidance is training-free and does not require any fine-tuning of the diffusion model. To facilitate holistic scene modeling, we also propose a trajectory initialization method. It effectively identifies regions that are outside the field of view and occluded. We further design a scheme tailored for 3DGS optimization with generated sequences. Experiments demonstrate that our method significantly improves upon the baseline and achieves state-of-the-art performance on challenging benchmarks.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

Achieving realistic simulations of humans engaging in a wide range of object interactions has long been a fundamental goal in animation. Extending physics-based motion imitation techniques to complex human-object interactions (HOIs) is particularly challenging due to the intricate coupling between human-object dynamics and the variability in object geometries and properties. Moreover, motion capture data often contain artifacts such as inaccurate contacts and insufficient hand details, which hinder the learning process. We introduce InterMimic, a framework that overcomes these challenges by enabling a single policy to robustly learn from imperfect motion capture sequences encompassing tens of hours of diverse full-body interaction skills with dynamic and varied objects. Our key insight is employing a curriculum strategy: perfecting first, then scaling up. We first train subject-specific teacher policies to mimic, retarget, and refine the motion capture data, effectively correcting imperfections. Then, we distill a student policy from these teachers; the teachers act as online experts providing direct supervision and supplying clean references. This ensures that the student policy learns from high-quality guidance despite imperfections in the original dataset. Our experiments demonstrate that InterMimic produces realistic and diverse interactions across various HOI datasets. Notably, the learned policy exhibits zero-shot generalization, allowing seamless integration with …

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

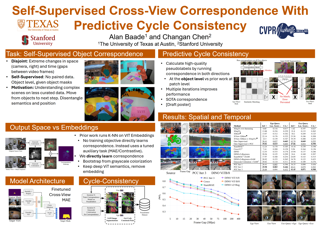

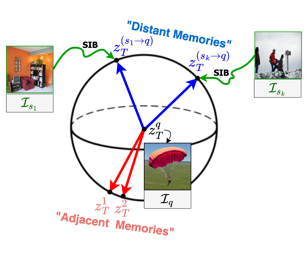

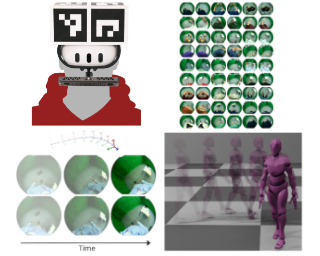

Learning self-supervised visual correspondence is a long-studied task fundamental to visual understanding and human perception. However, existing correspondence methods largely focus on small image transformations, such as object tracking in high-framerate videos or learning pixel-to-pixel mappings between images with high view overlap. This severely limits their application in dynamic multi-view settings such as robot imitation learning or augmented reality. In this work, we introduce Predictive Cycle Consistency for learning object correspondence between extremely disjoint views of a scene without paired segmentation data. Our technique bootstraps object correspondence pseudolabels from raw image segmentations using conditional grayscale colorization and a cycle-consistency refinement prior. We then train deep ViTs on these pseudolabels, which we use to generate higher-quality pseudolabels and iteratively train better correspondence models. We demonstrate the performance of our method under both extreme in-the-wild camera view changes and across large temporal gaps in video. Our approach beats all prior supervised and prior SoTA self-supervised correspondence models on the EgoExo4D correspondence benchmark (+6.7 IoU Exo Query) and the prior SoTA self-supervised methods SiamMAE and DINO V1&V2 on the DAVIS-2017 and LVOS datasets across large frame gaps.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

Personalized 3D avatar editing holds significant promise due to its user-friendliness and availability to applications such as AR/VR and virtual try-ons. Previous studies have explored the feasibility of 3D editing, but often struggle to generate visually pleasing results, possibly due to the unstable representation learning under mixed optimization of geometry and texture in complicated reconstructed scenarios. In this paper, we aim to provide an accessible solution for ordinary users to create their editable 3D avatars with precise region localization, geometric adaptability, and photorealistic renderings. To tackle this challenge, we introduce a meticulously designed framework that decouples the editing process into local spatial adaptation and realistic appearance learning, utilizing a hybrid Tetrahedron-constrained Gaussian Splatting (TetGS) as the underlying representation. TetGS combines the controllable explicit structure of tetrahedral grids with the high-precision rendering capabilities of 3D Gaussian Splatting and is optimized in a progressive manner comprising three stages: 3D avatar instantiation from real-world monocular videos to provide accurate priors for TetGS initialization; localized spatial adaptation with explicitly partitioned tetrahedrons to guide the redistribution of Gaussian kernels; and geometry-based appearance generation with a coarse-to-fine activation strategy. Both qualitative and quantitative experiments demonstrate the effectiveness and superiority of our approach in generating photorealistic 3D …

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

We propose 4Real-Video, a novel framework for generating 4D videos, organized as a grid of video frames with both time and viewpoint axes. In this grid, each row contains frames sharing the same timestep, while each column contains frames from the same viewpoint. One stream performs viewpoint updates on columns, and the other stream performs temporal updates on rows. After each diffusion transformer layer, a newly designed synchronization layer exchanges information between the two token streams. We propose two implementations of the synchronization layer, using either hard or soft synchronization. This feedforward architecture improves upon previous work in three ways: higher inference speed, enhanced visual quality (measured by FVD, CLIP, and VideoScore), and improved temporal and viewpoint consistency (measured by VideoScore, GIM-Confidence, and Dust3R-Confidence).

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

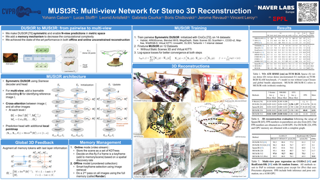

Stereo matching recovers depth from image correspondences. Existing methods struggle to handle ill-posed regions with limited matching cues, such as occlusions and textureless areas. To address this, we propose MonSter, a novel method that leverages the complementary strengths of monocular depth estimation and stereo matching. MonSter integrates monocular depth and stereo matching into a dual-branch architecture in which the two branches iteratively improve each other. Confidence-based guidance adaptively selects reliable stereo cues for monodepth scale-shift recovery, and utilizes explicit monocular depth priors to enhance stereo matching in ill-posed regions. Such iterative mutual enhancement enables MonSter to evolve monodepth priors from coarse object-level structures to pixel-level geometry, fully unlocking the potential of stereo matching. As shown in Fig. 2, MonSter ranks 1st across the five most commonly used leaderboards (SceneFlow, KITTI 2012, KITTI 2015, Middlebury, and ETH3D), achieving up to a 49.5% improvement over the previous best method (Bad 1.0 on ETH3D). Comprehensive analysis verifies the effectiveness of MonSter in ill-posed regions. In terms of zero-shot generalization, MonSter significantly and consistently outperforms state-of-the-art methods across the board. Code will be released upon acceptance.
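The idea of scale-shift recovery can be sketched as a confidence-weighted least-squares fit that maps relative monocular predictions onto stereo disparities. This is a generic alignment routine under assumed inputs (flat lists of per-pixel values and a hypothetical confidence threshold), not MonSter's actual module.

```python
def fit_scale_shift(mono, stereo, confidence, threshold=0.5):
    """Fit scale s and shift t minimizing sum_i w_i * (s * mono_i + t - stereo_i)^2.

    Only pixels whose confidence exceeds `threshold` take part, and the
    confidence itself serves as the weight w_i. Assumes at least two
    selected pixels with distinct monocular values.
    """
    selected = [
        (m, d, c)
        for m, d, c in zip(mono, stereo, confidence)
        if c > threshold
    ]
    weight_sum = sum(c for _, _, c in selected)
    mono_mean = sum(c * m for m, _, c in selected) / weight_sum
    stereo_mean = sum(c * d for _, d, c in selected) / weight_sum

    # Closed-form weighted linear regression of stereo on mono.
    covariance = sum(c * (m - mono_mean) * (d - stereo_mean) for m, d, c in selected)
    variance = sum(c * (m - mono_mean) ** 2 for m, _, c in selected)
    scale = covariance / variance
    shift = stereo_mean - scale * mono_mean
    return scale, shift


# Relative monocular values, stereo disparities, and stereo confidence.
# The fourth stereo value is an outlier with low confidence (made-up data).
mono = [0.1, 0.2, 0.3, 0.4, 0.5]
stereo = [1.2, 1.4, 1.6, 9.0, 2.0]
confidence = [0.9, 0.8, 0.9, 0.1, 0.7]

scale, shift = fit_scale_shift(mono, stereo, confidence)
aligned = [scale * m + shift for m in mono]
```

Because the unreliable pixel is excluded, the fit recovers the underlying relation (stereo = 2 × mono + 1 in this toy data), and the aligned monocular value can then stand in for the stereo estimate at that pixel.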

|

|

Highlight

|

Poster

[ ExHall D ]

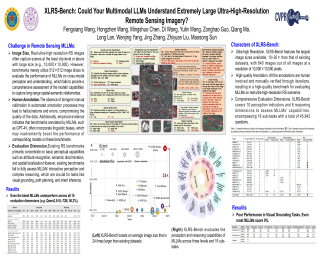

Abstract

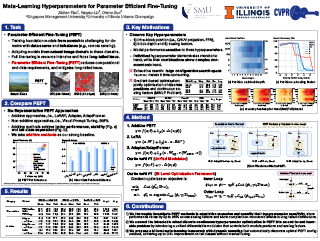

Training large foundation models of remote-sensing (RS) images is almost impossible due to the limited and long-tailed data problems. Fine-tuning natural image pre-trained models on RS images is a straightforward solution. To reduce computational costs and improve performance on tail classes, existing methods apply parameter-efficient fine-tuning (PEFT) techniques, such as LoRA and AdaptFormer. However, we observe that fixed hyperparameters, such as intra-layer positions, layer depth, and scaling factors, can considerably hinder PEFT performance, as fine-tuning on RS images proves highly sensitive to these settings. To address this, we propose MetaPEFT, a method incorporating adaptive scalers that dynamically adjust module influence during fine-tuning. MetaPEFT dynamically adjusts three key factors of PEFT on RS images: module insertion, layer selection, and module-wise learning rates, which collectively control the influence of PEFT modules across the network. We conduct extensive experiments on three transfer-learning scenarios and five datasets. The results show that MetaPEFT achieves state-of-the-art performance in cross-spectral adaptation, requiring only a small number of trainable parameters and improving tail-class accuracy significantly. Our code is available in the supplementary materials for review.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

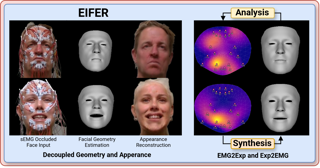

The relationship between muscle activity and resulting facial expressions is crucial for various fields, including psychology, medicine, and entertainment. The synchronous recording of facial mimicry and muscular activity via surface electromyography (sEMG) provides a unique window into these complex dynamics. Unfortunately, existing methods for facial analysis cannot handle electrode occlusion, rendering them ineffective. Even with occlusion-free reference images of the same person, variations in expression intensity and execution are unmatchable. Our electromyography-informed facial expression reconstruction (EIFER) approach is a novel method to restore faces under sEMG occlusion faithfully in an adversarial manner. We decouple facial geometry and visual appearance (e.g., skin texture, lighting, electrodes) by combining a 3D Morphable Model (3DMM) with neural unpaired image-to-image translation via reference recordings. Then, EIFER learns a bidirectional mapping between 3DMM expression parameters and muscle activity, establishing correspondence between the two domains. We validate the effectiveness of our approach through experiments on a dataset of synchronized sEMG recordings and facial mimicry, demonstrating faithful geometry and appearance reconstruction. Further, we synthesize expressions based on muscle activity and show how observed expressions can predict dynamic muscle activity. Consequently, EIFER introduces a new paradigm for facial electromyography, which could be extended to other forms of multi-modal face recordings.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

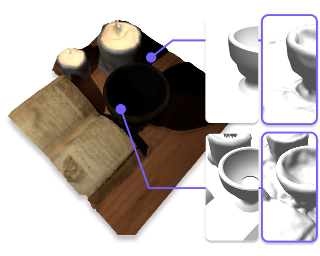

Recent image-to-3D reconstruction models have greatly advanced geometry generation, but they still struggle to faithfully generate realistic appearance. To address this, we introduce ARM, a novel method that reconstructs high-quality 3D meshes and realistic appearance from sparse-view images. The core of ARM lies in decoupling geometry from appearance, processing appearance within the UV texture space. Unlike previous methods, ARM improves texture quality by explicitly back-projecting measurements onto the texture map and processing them in a UV space module with a global receptive field. To resolve ambiguities between material and illumination in input images, ARM introduces a material prior that encodes semantic appearance information, enhancing the robustness of appearance decomposition. Trained on just 8 H100 GPUs, ARM outperforms existing methods both quantitatively and qualitatively.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

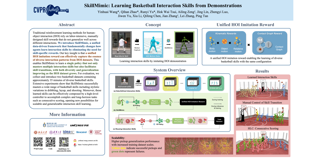

Traditional reinforcement learning methods for interaction skills rely on labor-intensive, manually designed rewards that do not generalize well across different skills. Inspired by how humans learn from demonstrations, we propose ISMimic, the first data-driven approach that Mimics both human and ball motions to learn diverse Interaction Skills, e.g., a wide variety of challenging basketball skills. ISMimic employs a unified configuration to learn diverse interaction skills from human-ball motion datasets, with skill diversity and generalization improving as the dataset grows. This approach allows training a single policy to learn multiple interaction skills and allows smooth skill switching. The interaction skills acquired by ISMimic can be easily reused by a high-level controller to accomplish high-level tasks. To evaluate our approach, we introduce two basketball datasets that collectively contain about 35 minutes of diverse basketball skills. Experiments show that our method can effectively acquire various reusable basketball skills including diverse styles of dribbling, layup, and shooting. Video results and 3D visualization are available at https://ismimic.github.io

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

Building Free-Viewpoint Videos in a streaming manner offers the advantage of rapid responsiveness compared to offline training methods, greatly enhancing user experience. However, current streaming approaches face challenges of high per-frame reconstruction time (10s+) and error accumulation, limiting their broader application. In this paper, we propose Instant Gaussian Stream (IGS), a fast and generalizable streaming framework, to address these issues. First, we introduce a generalized Anchor-driven Gaussian Motion Network, which projects multi-view 2D motion features into 3D space, using anchor points to drive the motion of all Gaussians. This generalized network generates the motion of Gaussians for each target frame in the time required for a single inference. Second, we propose a Key-frame-guided Streaming Strategy that refines each key frame, enabling accurate reconstruction of temporally complex scenes while mitigating error accumulation. We conducted extensive in-domain and cross-domain evaluations, demonstrating that our approach can achieve streaming with an average per-frame reconstruction time of 2s+, alongside an enhancement in view synthesis quality.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

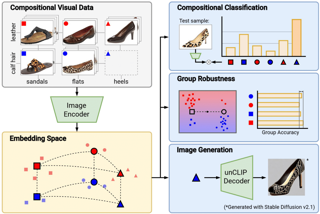

While image-text foundation models have succeeded across diverse downstream tasks, they still face challenges in the presence of spurious correlations between the input and label. To address this issue, we propose a simple three-step approach, Project-Probe-Aggregate (PPA), that enables parameter-efficient fine-tuning for foundation models without relying on group annotations. Building upon the failure-based debiasing scheme, our method, PPA, improves its two key components: minority sample identification and the robust training algorithm. Specifically, we first train biased classifiers by projecting image features onto the nullspace of class proxies from text encoders. Next, we infer group labels using the biased classifier and probe group targets with prior correction. Finally, we aggregate group weights of each class to produce the debiased classifier. Our theoretical analysis shows that PPA enhances minority group identification and is Bayes optimal for minimizing the balanced group error, mitigating spurious correlations. Extensive experimental results confirm the effectiveness of PPA: it outperforms the state-of-the-art in average worst-group accuracy while requiring less than 0.01% tunable parameters without training group labels.
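The projection step can be illustrated with plain linear algebra: removing from a feature every component that lies in the span of a set of proxy vectors leaves a vector orthogonal to all of them, i.e. a vector in their nullspace. The sketch below uses Gram-Schmidt on toy 3D vectors; the helper names are hypothetical and this is not the PPA implementation.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def orthonormal_basis(vectors, eps=1e-12):
    """Gram-Schmidt: orthonormal basis for the span of `vectors`."""
    basis = []
    for v in vectors:
        residual = list(v)
        for b in basis:
            coeff = dot(residual, b)
            residual = [r - coeff * bi for r, bi in zip(residual, b)]
        norm = dot(residual, residual) ** 0.5
        if norm > eps:  # skip vectors already in the span
            basis.append([r / norm for r in residual])
    return basis


def project_to_nullspace(feature, proxies):
    """Remove every component of `feature` lying in the span of `proxies`."""
    residual = list(feature)
    for b in orthonormal_basis(proxies):
        coeff = dot(residual, b)
        residual = [r - coeff * bi for r, bi in zip(residual, b)]
    return residual


# Two toy class proxies spanning the x-y plane, and one image feature.
proxies = [[1.0, 0.0, 0.0], [1.0, 1.0, 0.0]]
feature = [3.0, 4.0, 5.0]
projected = project_to_nullspace(feature, proxies)
```

After projection, only the part of the feature that the proxies cannot explain remains, so a classifier trained on it has to rely on whatever non-class information is left, which is one way to read the "biased classifier" in the abstract.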

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

Vision Transformers (ViTs) have shown remarkable performance and scalability across various computer vision tasks. Currently, to apply single-scale ViTs to image segmentation, existing methods adopt a convolutional adapter to generate multi-scale features, a pixel decoder to fuse these features, and a Transformer decoder that leverages them to make predictions. In this paper, we show that the inductive biases introduced by these task-specific components can instead be learned by the ViT itself, given sufficiently large models and extensive pre-training. Leveraging these findings, we introduce the Encoder-only Mask Transformer (EoMT), which repurposes the plain ViT architecture to conduct image segmentation. Using large models and strong pre-training, EoMT obtains a segmentation performance similar to state-of-the-art models that use task-specific components. At the same time, EoMT is significantly faster than these methods due to its architectural simplicity, e.g., up to 4× faster using ViT-L. Across a range of model sizes, EoMT demonstrates an optimal balance between segmentation performance and inference speed, suggesting that compute resources are better allocated to scaling the ViT itself rather than adding architectural complexity. Code will be released upon acceptance.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

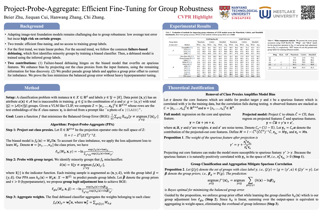

Personalized portrait synthesis, essential in domains like social entertainment, has recently made significant progress. Methods based on person-wise fine-tuning, such as LoRA and DreamBooth, can produce photorealistic outputs but need training on individual samples, consuming time and resources and risking instability. Adapter-based techniques such as IP-Adapter freeze the foundational model parameters and employ a plug-in architecture to enable zero-shot inference, but they often exhibit a lack of naturalness and authenticity, which are not to be overlooked in portrait synthesis tasks. In this paper, we introduce a parameter-efficient adaptive generation method, namely HyperLoRA, that uses an adaptive plug-in network to generate LoRA weights, merging the superior performance of LoRA with the zero-shot capability of the adapter scheme. Through our carefully designed network structure and training strategy, we achieve zero-shot personalized portrait generation (supporting both single and multiple image inputs) with high photorealism, fidelity, and editability.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

Dataset distillation has emerged as a powerful approach for reducing data requirements in deep learning. Among various methods, distribution matching-based approaches stand out for their balance of computational efficiency and strong performance. However, existing distance metrics used in distribution matching often fail to accurately capture distributional differences, leading to unreliable measures of discrepancy. In this paper, we reformulate dataset distillation as a minmax optimization problem and introduce Neural Characteristic Function Discrepancy (NCFD), a comprehensive and theoretically grounded metric for measuring distributional differences. NCFD leverages the Characteristic Function (CF) to encapsulate full distributional information, employing a neural network to optimize the sampling strategy for the CF's frequency arguments, thereby maximizing the discrepancy to enhance distance estimation. Simultaneously, we minimize the difference between real and synthetic data under this optimized NCFD measure. Our approach, termed Neural Characteristic Function Matching (NCFM), inherently aligns the phase and amplitude of neural features in the complex plane for both real and synthetic data, achieving a balance between realism and diversity in synthetic samples. Experiments demonstrate that our method achieves significant performance gains over state-of-the-art methods on both low- and high-resolution datasets. Notably, we achieve a 20.5% accuracy boost on ImageSquawk. Our method also reduces GPU memory …
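To make the characteristic-function idea concrete, the sketch below computes an empirical CF, φ(t) = mean of exp(i·t·x), for two sets of scalar samples and averages the squared modulus of their difference over a few frequencies. It is a simplified stand-in under stated assumptions: the samples are scalars rather than neural features, and the frequencies are a fixed hand-picked list rather than drawn from a learned sampling network as in NCFD.

```python
import cmath


def empirical_cf(samples, t):
    """Empirical characteristic function: mean of exp(i * t * x)."""
    return sum(cmath.exp(1j * t * x) for x in samples) / len(samples)


def cf_discrepancy(real, synthetic, frequencies):
    """Mean squared modulus of the CF difference over `frequencies`."""
    return sum(
        abs(empirical_cf(real, t) - empirical_cf(synthetic, t)) ** 2
        for t in frequencies
    ) / len(frequencies)


frequencies = [0.5, 1.0, 2.0]  # fixed here; learned in the paper's setting
real = [0.0, 1.0, 2.0]
matching = [0.0, 1.0, 2.0]     # identical distribution
shifted = [5.0, 6.0, 7.0]      # same shape, different location

zero_gap = cf_discrepancy(real, matching, frequencies)
positive_gap = cf_discrepancy(real, shifted, frequencies)
```

Each CF value is a complex number, so the difference captures both amplitude and phase mismatch; a pure shift of the samples, as in `shifted`, changes only the phase yet still produces a positive discrepancy.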

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

Given a video and a set of input object masks, an omnimatte method aims to decompose the video into semantically meaningful layers containing individual objects along with their associated effects, such as shadows and reflections. Existing omnimatte methods assume a static background or accurate pose and depth estimation and produce poor decompositions when these assumptions are violated. Furthermore, due to the lack of generative prior on natural videos, existing methods cannot complete dynamic occluded regions. We present a novel generative layered video decomposition framework to address the omnimatte problem. Our method does not assume a stationary scene or require camera pose or depth information and produces clean, complete layers, including convincing completions of occluded dynamic regions. Our core idea is to train a video diffusion model to identify and remove scene effects caused by a specific object. We show that this model can be finetuned from an existing video inpainting model with a small, carefully curated dataset, and demonstrate high-quality decompositions and editing results for a wide range of casually captured videos containing soft shadows, glossy reflections, splashing water, and more.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

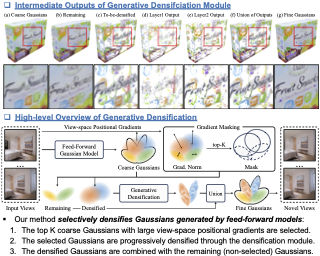

Point management is critical for optimizing 3D Gaussian Splatting models, as point initialization (e.g., via structure from motion) is often distributionally inappropriate. Typically, the Adaptive Density Control (ADC) algorithm is adopted, leveraging view-averaged gradient magnitude thresholding for point densification, opacity thresholding for pruning, and regular all-points opacity reset. We reveal that this strategy is limited in tackling intricate or special image regions (e.g., transparent ones) due to its inability to identify all 3D zones requiring point densification, and its lack of an appropriate mechanism to handle ill-conditioned points with negative impacts (e.g., occlusion due to false high opacity). To address these limitations, we propose a Localized Point Management (LPM) strategy, capable of identifying those error-contributing zones in greatest need of both point addition and geometry calibration. Zone identification is achieved by leveraging the underlying multiview geometry constraints, subject to image rendering errors. We apply point densification in the identified zones and then reset the opacity of the points in front of these regions, creating a new opportunity to correct poorly conditioned points. Serving as a versatile plugin, LPM can be seamlessly integrated into existing static 3D and dynamic 4D Gaussian Splatting models with minimal additional cost. Experimental evaluations validate the efficacy of our LPM in boosting a variety of existing …
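The baseline ADC rule described above (gradient thresholding for densification, opacity thresholding for pruning) can be sketched as two simple filters. This is a simplified illustration of the baseline only, not of LPM: the function name and threshold values are assumptions, and the clone/split step and the periodic opacity reset are omitted.

```python
def adc_decisions(avg_grad_norms, opacities,
                  grad_threshold=2e-4, opacity_threshold=5e-3):
    """Return indices of points to densify and points to prune.

    avg_grad_norms: view-averaged positional gradient magnitude per point.
    opacities:      current opacity per point, in [0, 1].
    Threshold defaults are illustrative, not prescribed by the abstract.
    """
    # Large average gradients suggest an under-reconstructed region.
    densify = [i for i, g in enumerate(avg_grad_norms) if g > grad_threshold]
    # Nearly transparent points contribute little and can be removed.
    prune = [i for i, o in enumerate(opacities) if o < opacity_threshold]
    return densify, prune


densify, prune = adc_decisions(
    avg_grad_norms=[1e-5, 5e-4, 3e-4],
    opacities=[0.9, 0.001, 0.5],
)
```

Both filters look only at per-point statistics, which is the limitation the abstract points to: a zone can need more points without any existing point crossing the gradient threshold, and a point with falsely high opacity is never flagged for pruning.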

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

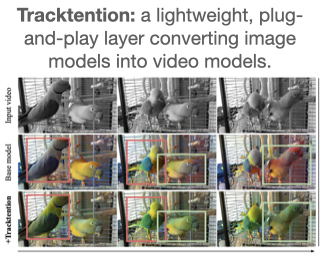

Temporal consistency is critical in video prediction. Traditional methods, such as temporal attention mechanisms and 3D convolutions, often struggle with significant object movements and fail to capture long-range temporal dependencies in dynamic scenes. To address these limitations, we propose the Tracktention Layer, a novel architectural component that explicitly integrates motion information using point tracks — sequences of corresponding points across frames. By incorporating these motion cues, the Tracktention Layer enhances temporal alignment and effectively handles complex object motions, maintaining consistent feature representations over time. Our approach is computationally efficient and can be seamlessly integrated into existing models, such as Vision Transformers, with minimal modification. Empirical evaluations on standard video estimation benchmarks demonstrate that models augmented with the Tracktention Layer exhibit significantly improved temporal consistency compared to baseline models.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

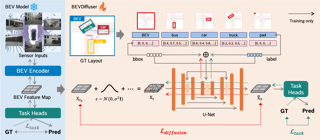

Bird's-eye-view (BEV) representations play a crucial role in autonomous driving tasks. Despite recent advancements in BEV generation, inherent noise, stemming from sensor limitations and the learning process, remains largely unaddressed, resulting in suboptimal BEV representations that adversely impact the performance of downstream tasks. To address this, we propose BEVDiffuser, a novel diffusion model that effectively denoises BEV feature maps using the ground-truth object layout as guidance. BEVDiffuser can be operated in a plug-and-play manner during training time to enhance existing BEV models without requiring any architectural modifications. Extensive experiments on the challenging nuScenes dataset demonstrate BEVDiffuser's exceptional denoising and generation capabilities, which enable significant enhancement to existing BEV models, as evidenced by notable improvements of 12.3% in mAP and 10.1% in NDS achieved for 3D object detection without introducing additional computational complexity. Moreover, substantial improvements in long-tail object detection and under challenging weather and lighting conditions further validate BEVDiffuser's effectiveness in denoising and enhancing BEV representations.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

3D plane reconstruction from a single image is a crucial yet challenging topic in 3D computer vision. Previous state-of-the-art (SOTA) methods have focused on training their system on a single dataset from either the indoor or the outdoor domain, limiting their generalizability across diverse testing data. In this work, we introduce a novel framework dubbed ZeroPlane, a Transformer-based model targeting zero-shot 3D plane detection and reconstruction from a single image, over diverse domains and environments. To enable data-driven models on multiple domains, we have curated a large-scale (over 14 datasets and 560,000 images), high-resolution, densely-annotated planar benchmark from various indoor and outdoor scenes. To address the challenge of achieving desirable planar geometry on multi-dataset training, we propose to disentangle the representation of plane normal and offset, and employ an exemplar-guided, classification-then-regression paradigm to learn plane and offset respectively. Additionally, we employ advanced backbones as the image encoder, and present an effective pixel-geometry-enhanced plane embedding module to further facilitate planar reconstruction. Extensive experiments across multiple zero-shot evaluation datasets have demonstrated that our approach significantly outperforms previous methods on both reconstruction accuracy and generalizability, especially over in-the-wild data. We will release all of the labeled data, code and models upon the acceptance of this paper.
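The normal/offset parameterization mentioned above has a simple geometric reading: a plane is the set of 3D points X with n · X = d, and the depth at a pixel follows from intersecting that pixel's viewing ray with the plane. The sketch below assumes a pinhole camera with hypothetical intrinsics and a camera frame with z pointing forward and y pointing down; it illustrates the parameterization only, not ZeroPlane's network.

```python
def plane_depth(u, v, normal, offset, fx, fy, cx, cy):
    """Depth z at pixel (u, v) for the plane normal . X = offset.

    The viewing ray of the pixel is r = ((u - cx) / fx, (v - cy) / fy, 1),
    and every point on it is X = z * r, so z = offset / (normal . r).
    Returns None when the ray is parallel to the plane.
    """
    ray = ((u - cx) / fx, (v - cy) / fy, 1.0)
    denom = sum(n * r for n, r in zip(normal, ray))
    if abs(denom) < 1e-9:
        return None
    return offset / denom


# Hypothetical intrinsics for a 640 x 480 image.
fx = fy = 500.0
cx, cy = 320.0, 240.0

# A wall facing the camera, 2 m away: depth is 2 m at every pixel.
wall_depth = plane_depth(100.0, 50.0, (0.0, 0.0, 1.0), 2.0, fx, fy, cx, cy)

# A floor 1.5 m below the camera, seen through a pixel below the center.
floor_depth = plane_depth(320.0, 740.0, (0.0, 1.0, 0.0), 1.5, fx, fy, cx, cy)
```

Keeping the normal (a direction) separate from the offset (a metric distance) means the two quantities can be predicted with different strategies, which matches the disentangling the abstract describes.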

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

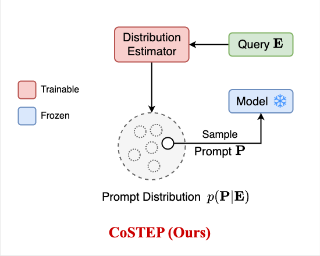

Recent advancements in prompt-based learning have significantly advanced image and video class-incremental learning. However, the prompts learned by these methods often fail to capture the diverse and informative characteristics of videos, and struggle to generalize effectively to future tasks and classes. To address these challenges, this paper proposes modeling the distribution of space-time prompts conditioned on the input video using a diffusion model. This generative approach allows the proposed model to naturally handle the diverse characteristics of videos, leading to more robust prompt learning and enhanced generalization capabilities. Additionally, we develop a mechanism that transfers the token relationship modeling capabilities of a pre-trained image transformer to spatio-temporal modeling for videos. Our approach has been thoroughly evaluated across four established benchmarks, showing remarkable improvements over existing state-of-the-art methods in video class-incremental learning.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

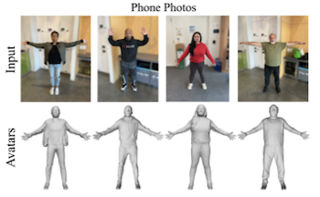

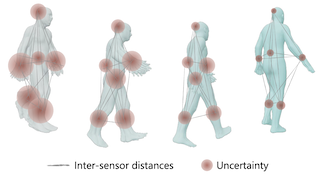

We present EgoAllo, a system for human motion estimation from a head-mounted device. Using only egocentric SLAM poses and images, EgoAllo guides sampling from a conditional diffusion model to estimate 3D body pose, height, and hand parameters that capture a device wearer's actions in the allocentric coordinate frame of the scene. To achieve this, our key insight is in representation: we propose spatial and temporal invariance criteria for improving model performance, from which we derive a head motion conditioning parameterization that improves estimation by up to 18%. We also show how the bodies estimated by our system can improve hand estimation: the resulting kinematic and temporal constraints can reduce world-frame errors in single-frame estimates by 40%.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

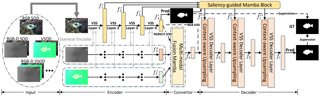

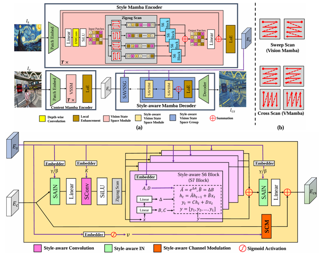

Existing salient object detection (SOD) models primarily resort to convolutional neural networks (CNNs) and Transformers. However, the limited receptive fields of CNNs and the quadratic computational complexity of Transformers both constrain the performance of current models on discovering attention-grabbing objects. The emerging state space model, namely Mamba, has demonstrated its potential to balance global receptive fields and computational complexity. Therefore, we propose a novel unified framework based on the pure Mamba architecture, dubbed saliency Mamba (Samba), to flexibly handle general SOD tasks, including RGB/RGB-D/RGB-T SOD, video SOD (VSOD), and RGB-D VSOD. Specifically, we rethink Mamba's scanning strategy from the perspective of SOD, and identify the importance of maintaining spatial continuity of salient patches within scanning sequences. Based on this, we propose a saliency-guided Mamba block (SGMB), incorporating a spatial neighboring scanning (SNS) algorithm to preserve spatial continuity of salient patches. Additionally, we propose a context-aware upsampling (CAU) method to promote hierarchical feature alignment and aggregation by modeling contextual dependencies. Experimental results show that our Samba outperforms existing methods across five SOD tasks on 21 datasets with lower computational cost, confirming the superiority of introducing Mamba to the SOD area. Our code will be made publicly available.

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

In text-to-image (T2I) generation applications, negative embeddings have proven to be a simple yet effective approach for enhancing generation quality. Typically, these negative embeddings are derived from user-defined negative prompts, which, while functional, are not necessarily optimal. In this paper, we introduce ReNeg, an end-to-end method designed to learn improved Negative embeddings guided by a Reward model. We employ a reward feedback learning framework and integrate classifier-free guidance (CFG) into the training process, which was previously utilized only during inference, thus enabling the effective learning of negative embeddings. We also propose two strategies for learning both global and per-sample negative embeddings. Extensive experiments show that the learned negative embedding significantly outperforms null-text and handcrafted counterparts, achieving substantial improvements in human preference alignment. Additionally, the negative embedding learned within the same text embedding space exhibits strong generalization capabilities. For example, using the same CLIP text encoder, the negative embedding learned on SD1.5 can be seamlessly transferred to text-to-image or even text-to-video models such as ControlNet, ZeroScope, and VideoCrafter2, resulting in consistent performance improvements across the board. Code and learned negative embeddings will be released.
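
As a reminder of where a negative embedding enters the sampler, the standard classifier-free guidance combination can be sketched as below. This is only the generic CFG formula, not ReNeg's training procedure; the function name `cfg_combine` and the plain-list representation of noise predictions are assumptions made for illustration.

```python
def cfg_combine(eps_cond, eps_neg, guidance_scale):
    """Generic classifier-free guidance step (illustrative sketch).

    eps_cond: noise prediction conditioned on the positive prompt embedding.
    eps_neg:  noise prediction conditioned on the negative (or null-text) embedding.
    The result moves away from eps_neg toward eps_cond by guidance_scale.
    """
    return [n + guidance_scale * (c - n) for c, n in zip(eps_cond, eps_neg)]


# Hypothetical toy predictions with two components each.
guided = cfg_combine([1.0, 2.0], [0.0, 1.0], guidance_scale=7.5)
```

With `guidance_scale=1.0` the function returns the conditional prediction unchanged, so the negative embedding only matters once the scale exceeds 1.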

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

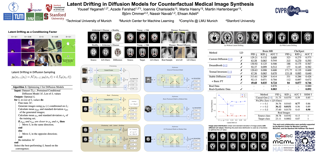

Generalist vision language models (VLMs) have made significant strides in computer vision, but they fall short in specialized fields like healthcare, where expert knowledge is essential. Current large multimodal models like Gemini and GPT-4o are insufficient for medical tasks due to their reliance on memorized internet knowledge rather than the nuanced expertise required in healthcare. Meanwhile, existing medical VLMs (e.g. Med-Gemini) often lack expert consultation as part of their design, and many rely on outdated, static datasets that were not created with modern, large deep learning models in mind. VLMs are usually trained in three stages: vision pre-training, vision-language pre-training, and instruction fine-tuning (IFT). IFT has been typically applied using a mixture of generic and healthcare data. In contrast, we propose that for medical VLMs, a fourth stage of specialized IFT is necessary, which focuses on medical data and includes information from domain expert models. Domain expert models developed for medical use are crucial because they are specifically trained for certain clinical tasks, e.g. to detect tumors and classify abnormalities through segmentation and classification, which learn fine-grained features of medical data, features that are often too intricate for a VLM to capture effectively. This paper introduces a new framework, VILA-M3, for …

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

Identity-preserving text-to-video (IPT2V) generation aims to create high-fidelity videos with consistent human identity. It is an important task in video generation but remains an open problem for generative models. This paper pushes the technical frontier of IPT2V in two directions that have not been resolved in the literature: (1) A tuning-free pipeline without tedious case-by-case finetuning, and (2) A frequency-aware heuristic identity-preserving Diffusion Transformer (DiT)-based control scheme. To achieve these goals, we propose **ConsisID**, a tuning-free DiT-based controllable IPT2V model to keep human-**id**entity **consis**tent in the generated video. Inspired by prior findings in frequency analysis of vision/diffusion transformers, it employs identity-control signals in the frequency domain, where facial features can be decomposed into low-frequency global features (e.g., profile, proportions) and high-frequency intrinsic features (e.g., identity markers that remain unaffected by pose changes). First, from a low-frequency perspective, we introduce a global facial extractor, which encodes the reference image and facial key points into a latent space, generating features enriched with low-frequency information. These features are then integrated into the shallow layers of the network to alleviate training challenges associated with DiT. Second, from a high-frequency perspective, we design a local facial extractor to capture high-frequency details and inject them into …

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

Existing text-to-image (T2I) diffusion models face several limitations, including large model sizes, slow runtime, and low-quality generation on mobile devices. This paper aims to address all of these challenges by developing an extremely small and fast T2I model that generates high-resolution and high-quality images on mobile platforms. We propose several techniques to achieve this goal. First, we systematically examine the design choices of the network architecture to reduce model parameters and latency, while ensuring high-quality generation. Second, to further improve generation quality, we employ cross-architecture knowledge distillation from a much larger model, using a multi-level approach to guide the training of our model from scratch. Third, we enable a few-step generation by integrating adversarial guidance with knowledge distillation. Our model, for the first time, demonstrates the generation of 1024x1024 px images on a mobile device in 1.2 to 2.3 seconds. On ImageNet-1K, our model, with only 372M parameters, achieves an FID of 2.06 for 256x256 px generation. On T2I benchmarks (i.e., GenEval and DPG-Bench), our model with merely 379M parameters surpasses large-scale models with billions of parameters at a significantly smaller size (e.g., 7x smaller than SDXL, 14x smaller than IF-XL).

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

As an effective approach to equip models with multi-task capabilities without additional training, model merging has garnered significant attention. However, existing merging methods face challenges of redundant parameter conflicts and the excessive storage burden of fine-tuned parameters. In this work, through controlled experiments, we reveal that for fine-tuned task vectors, only those parameters with magnitudes above a certain threshold contribute positively to the task, exhibiting a pulse-like characteristic. We then attempt to leverage this pulse-like characteristic to binarize the task vectors and reduce storage overhead. Further controlled experiments show that the binarized task vectors incur almost no decrease in fine-tuning and merging performance, and even exhibit stronger performance improvements as the proportion of redundant parameters increases. Based on these insights, we propose Task Switch (T-Switch), which decomposes task vectors into three components: 1) an activation switch instantiated by a binarized mask vector, 2) a polarity switch instantiated by a binarized sign vector, and 3) a scaling knob instantiated by a scalar coefficient. By storing task vectors in a binarized form, T-Switch alleviates parameter conflicts while ensuring efficient task parameter storage. Furthermore, to enable automated switch combination in T-Switch, we introduce Auto-Switch, which enables training-free switch combination via retrieval from a …
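
The three-part decomposition described above (mask, sign, scalar) can be illustrated with a minimal sketch. The abstract does not say how the threshold or the scalar coefficient is chosen, so the fixed `threshold` argument and the use of the mean kept magnitude as the scale are assumptions, as are the function names.

```python
def binarize_task_vector(pretrained, finetuned, threshold):
    """Split a task vector into (mask, sign, scale); illustrative only."""
    # Task vector: difference between fine-tuned and pre-trained weights.
    tau = [f - p for p, f in zip(pretrained, finetuned)]
    # Activation switch: keep only entries whose magnitude reaches the threshold.
    mask = [1 if abs(t) >= threshold else 0 for t in tau]
    # Polarity switch: one sign bit per entry.
    sign = [1 if t >= 0 else -1 for t in tau]
    # Scaling knob: ASSUMED here to be the mean magnitude of the kept entries.
    kept = [abs(t) for t, m in zip(tau, mask) if m]
    scale = sum(kept) / len(kept) if kept else 0.0
    return mask, sign, scale


def reconstruct_task_vector(mask, sign, scale):
    """Rebuild an approximate task vector from its binarized components."""
    return [scale * m * s for m, s in zip(mask, sign)]


# Hypothetical four-parameter model.
mask, sign, scale = binarize_task_vector(
    pretrained=[0.0, 1.0, -1.0, 0.5],
    finetuned=[0.5, 1.01, -1.6, 0.5],
    threshold=0.1,
)
approx_tau = reconstruct_task_vector(mask, sign, scale)
```

In this form each parameter costs two bits (mask and sign) plus one shared scalar per task, which is the storage saving the abstract alludes to.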

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

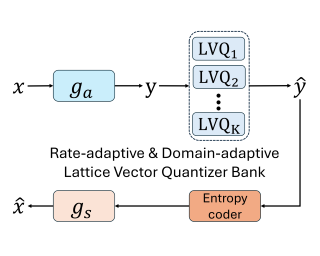

Recent research has explored integrating lattice vector quantization (LVQ) into learned image compression models. Due to its more efficient Voronoi covering of vector space than scalar quantization (SQ), LVQ achieves better rate-distortion (R-D) performance than SQ, while still retaining the low complexity advantage of SQ. However, existing LVQ-based methods have two shortcomings: 1) the lack of a multirate coding mode, which makes them incapable of operating at different rates; 2) the use of a fixed lattice basis, which makes them nonadaptive to changing source distributions. To overcome these shortcomings, we propose a novel adaptive LVQ method, which is the first among LVQ-based methods to achieve both rate and domain adaptations. By scaling the lattice basis vector, our method can adjust the density of lattice points to achieve various bit rate targets, achieving superior R-D performance to current SQ-based variable rate models. Additionally, by using a learned invertible linear transformation between two different input domains, we can reshape the predefined lattice cell to better represent the target domain, further improving the R-D performance. To our knowledge, this paper represents the first attempt to propose a unified solution for rate adaptation and domain adaptation through quantizer design.
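
To make the effect of scaling the lattice basis concrete, the following sketch quantizes a 2-D point onto a scaled lattice. It uses Babai rounding (round the coordinates in the basis), which is exact only for orthogonal bases and is swapped in here for simplicity; it is not the paper's quantizer, and `lattice_quantize` is a hypothetical helper.

```python
def lattice_quantize(x, basis, scale=1.0):
    """Babai rounding onto the 2-D lattice spanned by scale * basis.

    x:     point (x0, x1) to quantize.
    basis: two basis vectors ((b1x, b1y), (b2x, b2y)).
    Returns the integer lattice indices and the reconstructed lattice point.
    """
    (b1x, b1y), (b2x, b2y) = [(scale * vx, scale * vy) for vx, vy in basis]
    det = b1x * b2y - b2x * b1y
    if det == 0:
        raise ValueError("basis vectors must be linearly independent")
    # Solve u1 * b1 + u2 * b2 = x with Cramer's rule.
    u1 = (x[0] * b2y - b2x * x[1]) / det
    u2 = (b1x * x[1] - b1y * x[0]) / det
    # Round to integer indices; these are what an entropy coder would encode.
    k1, k2 = round(u1), round(u2)
    point = (k1 * b1x + k2 * b2x, k1 * b1y + k2 * b2y)
    return (k1, k2), point


square = ((1.0, 0.0), (0.0, 1.0))
coarse = lattice_quantize((0.6, 1.4), square, scale=1.0)
fine = lattice_quantize((0.6, 1.4), square, scale=0.5)
```

A smaller `scale` packs lattice points more densely, so the reconstruction lands closer to the input at the cost of a larger index range, which is the rate-distortion trade-off that basis scaling controls.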

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

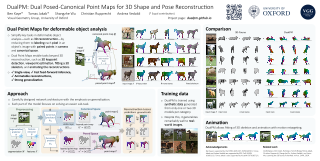

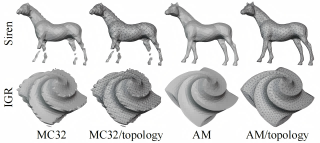

The choice of data representation is a key factor in the success of deep learning in geometric tasks. For instance, DUSt3R has recently introduced the concept of viewpoint-invariant point maps, generalizing depth prediction, and showing that one can reduce all the key problems in the 3D reconstruction of static scenes to predicting such point maps. In this paper, we develop an analogous concept for a very different problem, namely, the reconstruction of the 3D shape and pose of deformable objects. To this end, we introduce the Dual Point Maps (DualPM), where a pair of point maps is extracted from the same image, one associating pixels to their 3D locations on the object, and the other to a canonical version of the object at rest pose. We also extend point maps to amodal reconstruction, seeing through self-occlusions to obtain the complete shape of the object. We show that 3D reconstruction and 3D pose estimation reduce to the prediction of the DualPMs. We demonstrate empirically that this representation is a good target for a deep network to predict; specifically, we consider modelling horses, showing that DualPMs can be trained purely on 3D synthetic data, consisting of a single model of a horse, …

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract

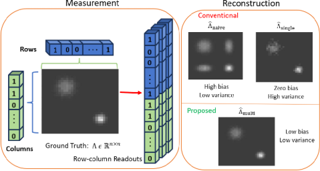

Readout multiplexing is a promising solution to overcome hardware limitations and data bottlenecks in imaging with single-photon detectors. Conventional multiplexed readout processing creates an upper bound on photon counts at a very fine time scale, where measurements with multiple detected photons must either be discarded or allowed to introduce significant bias. We formulate multiphoton coincidence resolution as an inverse imaging problem and introduce a solution framework to probabilistically resolve the spatial locations of photon incidences. Specifically, we develop a theoretical abstraction of row-column multiplexing and a model of photon events that make readouts ambiguous. Using this, we propose a novel estimator that spatially resolves up to four coincident photons. Our estimator achieves a 3 to 4 dB increase in the peak signal-to-noise ratio of image reconstruction compared to traditional methods at higher incident photon fluxes. Additionally, this method achieves a ~4× reduction in the required number of readout frames to achieve the same mean-squared error as other methods. Finally, our solution matches the Cramér-Rao bound for detection probability estimation for a wider range of incident flux values compared to conventional methods. While demonstrated for a specific detector type and readout architecture, this method can be extended to more general multiplexing …
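
The ambiguity that coincident photons create under row-column multiplexing can be seen in a toy model. The sketch below assumes an idealized, noise-free readout that reports only the sets of fired rows and columns, and at most one photon per pixel; both function names are hypothetical, and this brute-force enumeration is not the estimator proposed in the paper.

```python
from itertools import combinations, product


def readout(photons):
    """Idealized row-column readout: only the fired rows and columns are seen."""
    rows = sorted({r for r, _ in photons})
    cols = sorted({c for _, c in photons})
    return rows, cols


def consistent_configurations(rows, cols, n_photons):
    """Enumerate all photon placements that reproduce the given readout."""
    pixels = list(product(rows, cols))
    return [
        combo
        for combo in combinations(pixels, n_photons)
        if readout(combo) == (rows, cols)
    ]


# Two photons at (row 0, col 1) and (row 2, col 3) fire rows {0, 2} and cols {1, 3}.
rows, cols = readout([(0, 1), (2, 3)])
candidates = consistent_configurations(rows, cols, n_photons=2)
```

Here `candidates` contains both the true placement and its mirror image, (0, 3) with (2, 1), which is why a two-photon event cannot be localized from a single readout without a probabilistic model.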

|

|

Highlight

|

Poster

[ ExHall D ]

Abstract